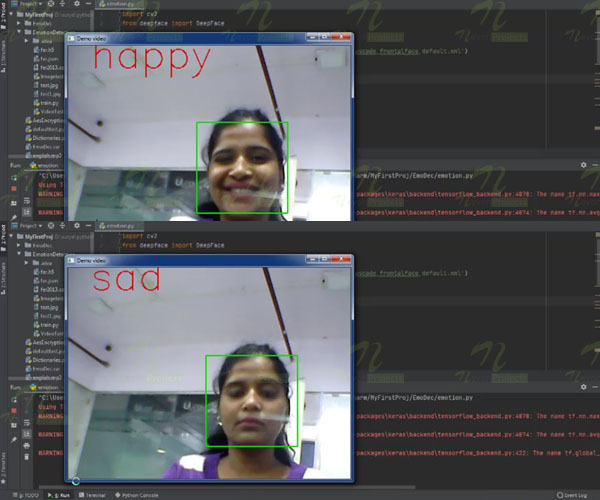

Recognizing facial expressions would let systems detect whether a person is happy or sad, much as a human being can. This would allow software and AI systems to provide a better experience in a range of applications. From detecting probable suicide attempts and helping to prevent them to playing mood-based music, there is a wide variety of AI applications in which emotion or mood detection can play a vital role.

The system uses a CNN (convolutional neural network) to extract the physiological signals from a face image and make a prediction. The result is obtained by capturing the person's image through a camera and correlating it with a training dataset to predict the person's emotional state.
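To make the CNN step concrete, here is a minimal sketch of a single forward pass in plain Python: convolution, ReLU, max pooling, a dense layer, and a softmax over emotion labels. It is only an illustration under stated assumptions. The label set `EMOTIONS`, the 8x8 input size, the number of kernels, and the random (untrained) weights are all hypothetical and are not taken from the project; a real system would load trained weights and a camera frame.

```python
import math
import random

# Hypothetical label set; the project text does not list its emotion classes.
EMOTIONS = ["happy", "sad", "neutral"]


def conv2d(image, kernel):
    """'Valid' 2D cross-correlation of a grayscale image with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Clamp negative activations to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(image, kernels, dense_weights, dense_bias):
    """Run conv -> ReLU -> pool per kernel, flatten, then dense -> softmax."""
    features = []
    for kernel in kernels:
        pooled = max_pool(relu(conv2d(image, kernel)))
        features.extend(v for row in pooled for v in row)
    scores = [
        sum(w * f for w, f in zip(weights, features)) + b
        for weights, b in zip(dense_weights, dense_bias)
    ]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs


# Stand-in data: a random 8x8 "image" and random, untrained weights.
rng = random.Random(0)
image = [[rng.random() for _ in range(8)] for _ in range(8)]
kernels = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
           for _ in range(2)]
# 8x8 input -> 6x6 after a 3x3 conv -> 3x3 after 2x2 pooling, per kernel.
n_features = len(kernels) * 3 * 3
dense_weights = [[rng.uniform(-1, 1) for _ in range(n_features)]
                 for _ in EMOTIONS]
dense_bias = [0.0] * len(EMOTIONS)

label, probs = predict_emotion(image, kernels, dense_weights, dense_bias)
print(label, [round(p, 3) for p in probs])
```

Because the weights are random, the printed label is meaningless; the point is the data flow from pixels to a probability per emotion. In practice a deep learning framework would replace these hand-written layers.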

This system can detect the live emotions of a particular user: it compares the captured information with a training dataset of known emotions to find a match. Different emotion types are detected by integrating information from facial expressions, body movement and gestures, and speech. The technology is said to contribute to the emergence of the so-called emotional or emotive Internet. The algorithms involved are supervised machine learning algorithms, in which a large set of annotated data is fed into the algorithm so that the system learns to predict the appropriate emotion.
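The idea of learning from annotated data and then finding the closest match can be illustrated with a much simpler supervised method than a CNN. The sketch below uses a nearest-centroid classifier, which is a stand-in chosen for brevity and not the project's algorithm. The two-dimensional feature vectors (imagined here as mouth curvature and brow raise), the labels, and the function names `fit_centroids` and `predict` are all hypothetical.

```python
import math
from collections import defaultdict


def fit_centroids(samples):
    """Learn one mean feature vector per label from (features, label) pairs."""
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids,
               key=lambda label: math.dist(centroids[label], features))


# Toy annotated dataset: hypothetical (mouth curvature, brow raise) features.
training = [
    ([0.9, 0.2], "happy"),
    ([0.8, 0.3], "happy"),
    ([-0.7, -0.1], "sad"),
    ([-0.9, -0.3], "sad"),
    ([0.1, 0.9], "surprised"),
    ([0.0, 0.8], "surprised"),
]

centroids = fit_centroids(training)
print(predict(centroids, [0.7, 0.1]))  # closest to the "happy" examples
```

The training step summarizes the annotated examples, and prediction matches a new observation against that summary. A CNN follows the same train-then-predict pattern, but it learns its features from raw pixels instead of relying on hand-picked measurements.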

Advantages

- Can be used in multiple AI tools to get user feedback.

- No need for human intervention.

- High-speed detection.

Algorithm

- CNN (Convolutional Neural Network)